[DL] Network Initialization

Optimizing a deep learning model is a significantly difficult problem, and its outcome is strongly affected by the initial point. As the network gets deeper, the optimization problem is more likely to be ill-conditioned, and backpropagation often fails, for example due to vanishing or exploding gradients.

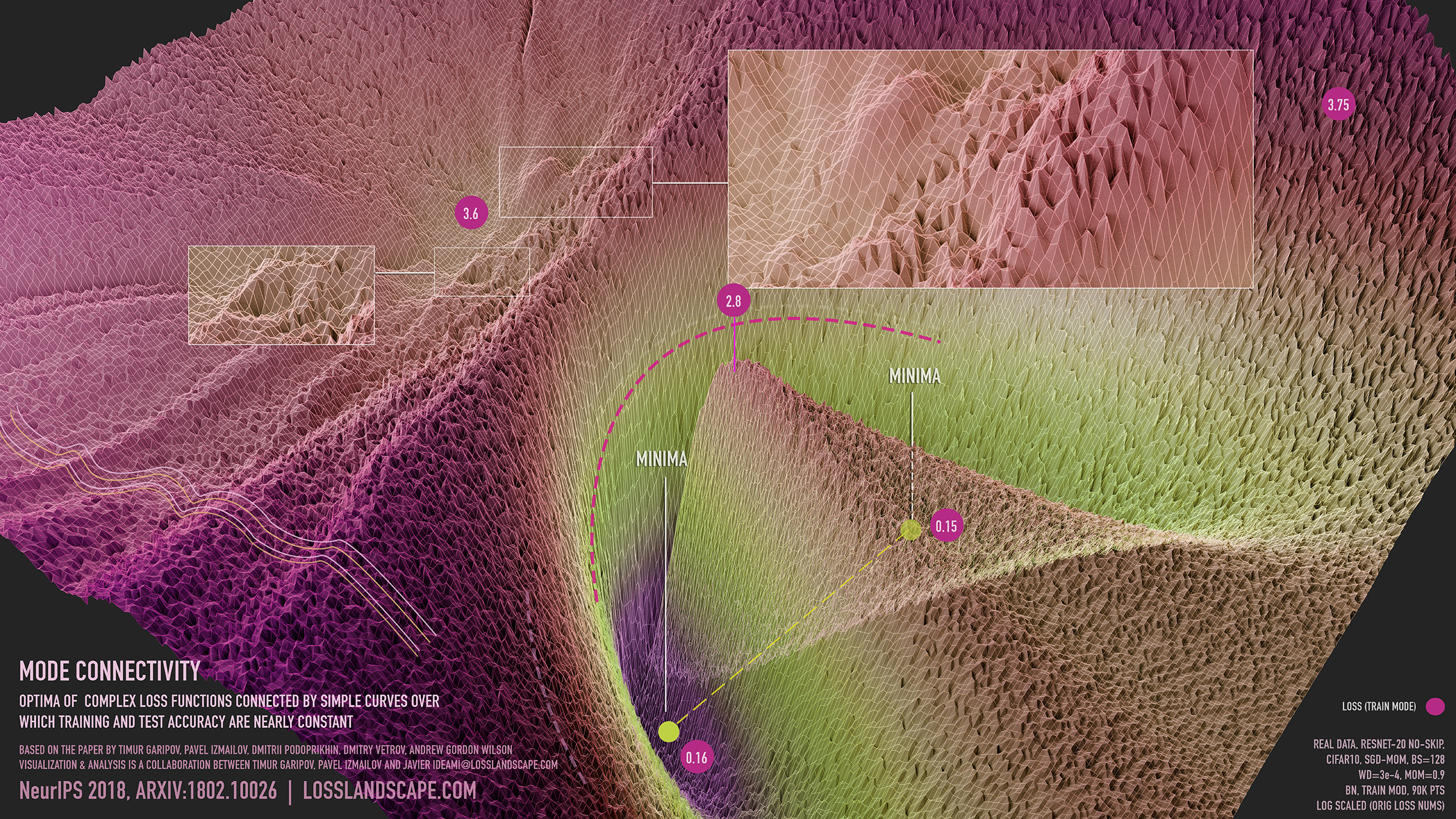

$\mathbf{Fig\ 1.}$ Complex loss surfaces

We therefore want to choose the initial point well. In particular, for randomly initialized weights, we want to retain the scale of each activation in the network so that it is neither too large nor too small, which lets gradients propagate well.

Poor initialization can aggravate the vanishing/exploding gradient problem and cause training to settle in a bad local minimum, so we need a good weight initialization. In this post, we delve into weight initialization techniques that keep activations and parameters on a reasonable scale, so that the scale neither grows nor shrinks in later layers as the network grows deeper.

Xavier Initialization (Glorot & Bengio, 2010)

Consider a single linear layer with weight $W$ and input $\mathbf{x} \in \mathbb{R}^{n_{\text{in}}}$. Suppose the entries $\mathbf{x}_i$ are i.i.d. $\mathcal{N}(0, \sigma_X^2)$ and the bias is $\mathbf{b} \approx \mathbf{0}$. The Gaussian assumption for the input is somewhat reasonable, as inputs are usually normalized by data preprocessing, batch normalization, etc.

Let the entries of $W$ be i.i.d. $\mathcal{N}(0, \sigma_W^2)$, independent of $\mathbf{x}$, and let $n_{\text{out}}$ be the number of output units. Then, if we forward propagate $\mathbf{x}$ to get the output $\mathbf{y}$,

\[\begin{aligned} \mathbf{y}_i = \sum_{j = 1}^{n_{\text{in}}} W_{ij} \mathbf{x}_j + \mathbf{b}_i, \qquad \text{Var}(\mathbf{y}_i) = n_{\text{in}} \sigma_W^2 \sigma_X^2 \end{aligned}\]

since each term $W_{ij} \mathbf{x}_j$ has mean $0$ and variance $\sigma_W^2 \sigma_X^2$, and the $n_{\text{in}}$ terms are independent (by the central limit theorem, $\mathbf{y}_i$ is also approximately Gaussian for large $n_{\text{in}}$). To retain the scale of activations, it is reasonable to choose $\sigma_W^2 = \frac{1}{n_{\text{in}}}$. This is known as Xavier (Glorot) initialization. Strictly speaking, the original Xavier Glorot initialization proposed in [3] takes one further step, described below; however, the Caffe library adopts this simpler variance in its implementation [2].
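A quick Monte Carlo check of this variance formula: the NumPy sketch below samples one bias-free linear layer with Gaussian weights and inputs and compares the empirical ratio $\text{Var}(\mathbf{y}_i) / \text{Var}(\mathbf{x}_j)$ with $n_{\text{in}} \sigma_W^2$. The helper `forward_variance_ratio` and the layer sizes are illustrative assumptions, and the estimates are only approximate because they come from finite samples.

```python
import numpy as np

def forward_variance_ratio(n_in, n_out, sigma_w2, sigma_x2=1.0, n_samples=2000, seed=0):
    """Estimate Var(y_i) / Var(x_j) for one linear layer y = W x (bias taken as 0)."""
    rng = np.random.default_rng(seed)
    # Entries of W are i.i.d. N(0, sigma_w2); inputs are i.i.d. N(0, sigma_x2).
    W = rng.normal(0.0, np.sqrt(sigma_w2), size=(n_out, n_in))
    X = rng.normal(0.0, np.sqrt(sigma_x2), size=(n_samples, n_in))
    Y = X @ W.T  # shape (n_samples, n_out)
    return Y.var() / X.var()

n_in, n_out = 512, 256
# Theory: Var(y_i) = n_in * sigma_w2 * sigma_x2, so the ratio should be close to n_in * sigma_w2.
print(forward_variance_ratio(n_in, n_out, sigma_w2=1.0))         # should be close to 512
print(forward_variance_ratio(n_in, n_out, sigma_w2=1.0 / n_in))  # should be close to 1
```

With $\sigma_W^2 = 1$ the activation variance is amplified by roughly $n_{\text{in}}$, whereas $\sigma_W^2 = \frac{1}{n_{\text{in}}}$ keeps it near the input variance.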

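Going through the equivalent steps for backpropagation, the gradient with respect to each input unit, $\frac{\partial L}{\partial \mathbf{x}_j} = \sum_{i=1}^{n_{\text{out}}} W_{ij} \frac{\partial L}{\partial \mathbf{y}_i}$ (where $L$ is the loss), is a sum of $n_{\text{out}}$ terms, so retaining the scale of gradients requires $\sigma_W^2 = \frac{1}{n_{\text{out}}}$. In general, $n_{\text{in}}$ and $n_{\text{out}}$ are not equal, so as a compromise, Glorot and Bengio replace the fan value with the average $\frac{n_{\text{in}} + n_{\text{out}}}{2}$ and propose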

\[\begin{aligned} \text{Var}(W) = \frac{2}{n_{\text{in}} + n_{\text{out}}} \end{aligned}\]

In short,

- Xavier Glorot Normal initialization

$W \sim \mathcal{N}(0, \text{Var}(W)) \text{ where } \text{Var}(W) = \frac{2}{ n_{\text{in}} + n_{\text{out}}}$.

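As the variance of $U(a, b)$ is $\frac{1}{12} (b - a)^2$, a symmetric $U(-a, a)$ has variance $\frac{a^2}{3}$; setting this equal to $\text{Var}(W)$ gives $a = \sqrt{3\,\text{Var}(W)}$, so the uniform initialization is determined as follows: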

- Xavier Glorot Uniform initialization

$W \sim U(-\sqrt{\frac{6}{ n_{\text{in}} + n_{\text{out}}}}, \sqrt{\frac{6}{ n_{\text{in}} + n_{\text{out}}}})$.
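Both Xavier variants can be sketched in a few lines of NumPy. The function names `xavier_normal` and `xavier_uniform`, the `(n_out, n_in)` weight layout, and the layer sizes below are assumptions for illustration, not a specific library's API. The loop also prints the forward gain $n_{\text{in}} \text{Var}(W)$ and the backward gain $n_{\text{out}} \text{Var}(W)$, which makes the compromise visible when $n_{\text{in}} \neq n_{\text{out}}$.

```python
import numpy as np

def xavier_normal(n_in, n_out, rng):
    """Glorot normal: W ~ N(0, 2 / (n_in + n_out)), returned with shape (n_out, n_in)."""
    std = np.sqrt(2.0 / (n_in + n_out))
    return rng.normal(0.0, std, size=(n_out, n_in))

def xavier_uniform(n_in, n_out, rng):
    """Glorot uniform: W ~ U(-a, a) with a = sqrt(6 / (n_in + n_out)).

    U(-a, a) has variance (2a)^2 / 12 = a^2 / 3 = 2 / (n_in + n_out).
    """
    a = np.sqrt(6.0 / (n_in + n_out))
    return rng.uniform(-a, a, size=(n_out, n_in))

rng = np.random.default_rng(0)
n_in, n_out = 300, 100
target = 2.0 / (n_in + n_out)  # 0.005
for init in (xavier_normal, xavier_uniform):
    W = init(n_in, n_out, rng)
    var_w = W.var()
    # Forward gain n_in * Var(W) and backward gain n_out * Var(W).
    # With n_in != n_out, Xavier makes neither gain exactly 1 (here about 1.5 and 0.5).
    print(f"{init.__name__}: Var(W)={var_w:.5f} (target {target:.5f}), "
          f"forward gain={n_in * var_w:.2f}, backward gain={n_out * var_w:.2f}")
```

Averaging the fan values means the forward signal is slightly amplified and the backward signal slightly damped here; when $n_{\text{in}} = n_{\text{out}}$ both gains are $1$.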

These initializations work well for $\text{tanh}$, sigmoid, and softmax. Glorot initialization can speed up training considerably, and it is one of the tricks that led to the success of deep learning.

$\mathbf{Fig\ 2.}$ Without Xavier Initialization

$\mathbf{Fig\ 3.}$ With Xavier Initialization
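As a rough numerical analogue of the comparison in Fig 2 and Fig 3 (not the experiment behind those figures), the sketch below pushes a unit-variance batch through 10 bias-free $\text{tanh}$ layers of width 500 and prints the standard deviation of each layer's activations under a small fixed-scale initialization, $\mathcal{N}(0, 0.01^2)$, versus Xavier normal. The depth, width, batch size, and the helper `tanh_activation_stds` are illustrative assumptions.

```python
import numpy as np

def tanh_activation_stds(weight_std, n_units=500, n_layers=10, batch=1000, seed=0):
    """Std of each layer's tanh activations in a bias-free stack of square layers.

    weight_std(n_in, n_out) returns the standard deviation of the Gaussian weights.
    """
    rng = np.random.default_rng(seed)
    h = rng.normal(0.0, 1.0, size=(batch, n_units))  # unit-variance input batch
    stds = []
    for _ in range(n_layers):
        W = rng.normal(0.0, weight_std(n_units, n_units), size=(n_units, n_units))
        h = np.tanh(h @ W)  # h has shape (batch, n_units); bias taken as 0
        stds.append(float(h.std()))
    return stds

small = tanh_activation_stds(lambda n_in, n_out: 0.01)  # fixed N(0, 0.01^2)
xavier = tanh_activation_stds(lambda n_in, n_out: np.sqrt(2.0 / (n_in + n_out)))
for layer, (s, x) in enumerate(zip(small, xavier), start=1):
    print(f"layer {layer:2d}: small-init std = {s:.2e}, Xavier std = {x:.3f}")
```

Under these assumptions, the small initialization shrinks the activation variance by a factor of about $n \cdot 0.01^2 = 0.05$ per layer, so activations collapse toward zero within a few layers, while with Xavier they shrink only slowly because $\text{tanh}$ is close to linear near zero.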

He Initialization (He et al., 2015)

In the first step, we saw that choosing $\sigma_W^2 = \frac{1}{n_{\text{in}}}$ retains the scale of activations. But that derivation ignored non-linearities: when the activation is ReLU, the negative half of the $0$-mean pre-activations is set to zero, which halves the second moment of the layer's output. To compensate, we multiply the weight variance by $2$ and obtain He initialization, proposed by He et al. [4]:

- He Normal initialization

$W \sim \mathcal{N}(0, \text{Var}(W)) \text{ where } \text{Var}(W) = \frac{2}{ n_{\text{in}} }$.

Since $U(-a, a)$ has variance $\frac{a^2}{3}$, setting it equal to $\frac{2}{n_{\text{in}}}$ gives $a = \sqrt{\frac{6}{n_{\text{in}}}}$, so the uniform initialization is determined as follows:

- He Uniform initialization

$W \sim U(-\sqrt{\frac{6}{ n_{\text{in}} }}, \sqrt{\frac{6}{ n_{\text{in}} }})$.

Hence, these initializations work well for ReLU.
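The factor of $2$ can be checked numerically. The NumPy sketch below first verifies that ReLU halves the second moment of a zero-mean Gaussian input, then tracks the mean squared activation through 20 bias-free ReLU layers of width 500 under $\text{Var}(W) = \frac{1}{n_{\text{in}}}$ (which equals Xavier for square layers) versus He's $\frac{2}{n_{\text{in}}}$. The depth, width, and helper names (`relu`, `relu_second_moments`) are illustrative assumptions.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

rng = np.random.default_rng(0)

# 1) ReLU halves the second moment of a zero-mean symmetric input: E[relu(z)^2] = Var(z) / 2.
z = rng.normal(0.0, 3.0, size=1_000_000)  # Var(z) = 9
print(np.mean(relu(z) ** 2), z.var() / 2)  # both close to 4.5

# 2) Mean squared activation through a deep ReLU stack (square layers, bias = 0).
def relu_second_moments(var_w, n_units=500, n_layers=20, batch=1000, seed=0):
    """var_w(n_in) returns the variance of the Gaussian weights for a layer with fan-in n_in."""
    rng = np.random.default_rng(seed)
    h = rng.normal(0.0, 1.0, size=(batch, n_units))  # unit-variance input batch
    moments = []
    for _ in range(n_layers):
        W = rng.normal(0.0, np.sqrt(var_w(n_units)), size=(n_units, n_units))
        h = relu(h @ W)
        moments.append(float(np.mean(h ** 2)))
    return moments

fan_in_only = relu_second_moments(lambda n_in: 1.0 / n_in)  # Var(W) = 1 / n_in
he = relu_second_moments(lambda n_in: 2.0 / n_in)           # He: Var(W) = 2 / n_in
print(f"after 20 layers: 1/n_in -> {fan_in_only[-1]:.2e}, He -> {he[-1]:.3f}")
```

Under these assumptions, the $\frac{1}{n_{\text{in}}}$ variant shrinks the second moment by roughly half per layer (about $0.5^{20} \approx 10^{-6}$ after 20 layers), while He initialization keeps it near $1$.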

Reference

[1] Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. Deep learning. MIT press, 2016.

[2] “An Explanation of Xavier Initialization.” andy’s blog.

[3] Glorot, Xavier, and Yoshua Bengio. “Understanding the difficulty of training deep feedforward neural networks.” Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010.

[4] He, Kaiming, et al. “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification.” Proceedings of the IEEE international conference on computer vision. 2015.
